UAV Ground Target Tracking


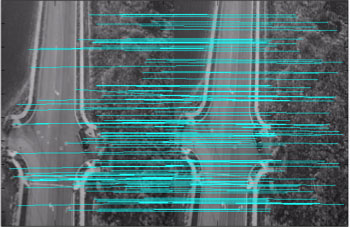

Tracking moving objects with a moving camera is a challenging task. In unmanned aerial vehicle applications, targets of interest such as humans and vehicles often change their location from one image frame to the next. This paper presents an object tracking method that relies on accurate feature description and matching with the SYBA descriptor to determine a homography between the previous frame and the current frame. Using this homography, the previous frame is transformed and registered to the current frame, so that the absolute difference between the two frames can be computed to locate the objects. Once the objects of interest are located, a Kalman filter is used to track their movement.
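The registration and differencing steps can be sketched in a few functions. This is a minimal illustration under stated assumptions, not the authors' implementation. Frames are small grayscale images stored as lists of lists, and the point correspondences are written by hand (in the method described above they would come from SYBA feature matches). The homography is solved exactly from four correspondences by Gaussian elimination rather than fitted robustly over many matches, which is commonly done with an estimator such as RANSAC. All function names, the synthetic `scene`, and the threshold of 30 are hypothetical.

```python
"""Register the previous frame to the current one with a homography, then
difference them to expose moving objects (illustrative sketch, pure Python)."""


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4(src, dst):
    """Exact 3x3 homography (with h33 = 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_h(h, x, y):
    """Map the point (x, y) through homography h, including the projective division."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def register_and_diff(prev, curr, h_curr_to_prev, threshold):
    """Inverse-warp prev onto curr's pixel grid (nearest neighbour) and
    mark pixels whose absolute difference exceeds threshold."""
    rows, cols = len(curr), len(curr[0])
    mask = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            px, py = apply_h(h_curr_to_prev, x, y)
            sx, sy = round(px), round(py)
            # Pixels with no counterpart in the previous frame stay unmarked.
            if 0 <= sy < len(prev) and 0 <= sx < len(prev[0]):
                if abs(curr[y][x] - prev[sy][sx]) > threshold:
                    mask[y][x] = 1
    return mask


def bounding_box(mask):
    """(x_min, y_min, x_max, y_max) of the marked pixels, or None if none are marked."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    xs, ys = zip(*pts)
    return min(xs), min(ys), max(xs), max(ys)


def scene(offset, obj_x):
    """Synthetic 10x10 frame: textured background seen from a camera shifted by
    `offset` pixels, plus a bright 2x2 object starting at world column obj_x."""
    frame = []
    for y in range(10):
        row = []
        for x in range(10):
            wx = x + offset  # world column seen by this pixel
            on_obj = obj_x <= wx < obj_x + 2 and 4 <= y < 6
            row.append(255 if on_obj else (3 * wx + 5 * y) % 40)
        frame.append(row)
    return frame


# Camera pans 2 px to the right while the object moves 3 px to the right.
prev, curr = scene(0, 4), scene(2, 7)
corners = [(0, 0), (9, 0), (0, 9), (9, 9)]
h = homography_from_4(corners, [(x + 2, y) for x, y in corners])  # curr -> prev
mask = register_and_diff(prev, curr, h, threshold=30)
print(bounding_box(mask))  # (2, 4, 6, 5)
```

Because only two frames are compared, the mask marks both the object's old position (columns 2 and 3) and its new one (columns 5 and 6), so the box spans both. The two rightmost columns of the current frame have no counterpart in the previous frame and are left unmarked. Estimating the mapping from the current frame back to the previous one lets each current pixel look up its registered value directly, which avoids the holes that forward warping can leave.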

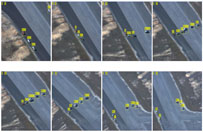

The proposed method is evaluated on three video sequences with image deformations: illumination change, blurring, and camera movement (i.e., viewpoint change). These video sequences were captured from unmanned aerial vehicles (UAVs) for tracking stationary and moving objects with a moving camera.
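The Kalman filter tracking stage can be illustrated with a minimal sketch. It assumes a constant-velocity motion model on each object's image centroid, which also covers stationary objects because their velocity estimate simply stays near zero. Under this assumption the x and y axes are decoupled, so the four-state filter splits into two independent two-state filters. The motion model, the noise values `q` and `r`, the initial covariance, and the class names are hypothetical choices, not details taken from the paper.

```python
class AxisKalman:
    """1-D constant-velocity Kalman filter with state [position, velocity]."""

    def __init__(self, pos, q=1e-2, r=1.0):
        self.x = [float(pos), 0.0]           # state: start at rest
        self.p = [[10.0, 0.0], [0.0, 10.0]]  # covariance: large initial uncertainty
        self.q = q                           # process noise added to each diagonal term
        self.r = r                           # measurement noise variance

    def predict(self, dt=1.0):
        pos, vel = self.x
        self.x = [pos + dt * vel, vel]       # x <- F x, with F = [[1, dt], [0, 1]]
        (a, b), (c, d) = self.p
        # P <- F P F^T + Q, written out for the 2x2 case
        self.p = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z):
        (a, b), (c, d) = self.p
        s = a + self.r                       # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s                # Kalman gain K = P H^T / s
        innov = z - self.x[0]
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        # P <- (I - K H) P
        self.p = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.x[0]


class CentroidTracker:
    """Track an (x, y) centroid with one independent constant-velocity filter per axis."""

    def __init__(self, x, y, **kwargs):
        self.fx = AxisKalman(x, **kwargs)
        self.fy = AxisKalman(y, **kwargs)

    def step(self, measurement=None):
        px, py = self.fx.predict(), self.fy.predict()
        if measurement is None:              # no detection this frame: coast on prediction
            return px, py
        return self.fx.update(measurement[0]), self.fy.update(measurement[1])


# Usage: a target drifting +2 px/frame in x and +1 px/frame in y, detected in frames 1..30.
tracker = CentroidTracker(10, 20)
for t in range(1, 31):
    tracker.step((10 + 2 * t, 20 + t))
coast = tracker.step()  # frame 31 has no detection
print(coast)  # should be close to the true position (72, 51)
```

Calling `step()` without a measurement lets the tracker coast on its prediction through frames where frame differencing fails to detect the object.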


Graduate Students:

Alok Desai


Publications:

- A. Desai and D.J. Lee, “An Efficient Feature Descriptor for Unmanned Aerial Vehicle Ground Moving Object Tracking,” AIAA Journal of Aerospace Information Systems, vol. 14, no. 6, pp. 345–349, June 2017. (SCIE)
- A. Desai, D.J. Lee, and M. Zhang, “Using Accurate Feature Matching for Unmanned Aerial Vehicle Ground Object Tracking,” Lecture Notes in Computer Science (LNCS), International Symposium on Visual Computing (ISVC), Part I, LNCS 8887, pp. 435–444, Las Vegas, NV, U.S.A., December 8–10, 2014.
